The origin story for superhero comic books rests in the 1930s and 1940s, but the creators of that era remained in relative obscurity, often with little or no financial reward. The 1980s and 1990s, however, ushered in an era of comic book creators as superstars.

One of the most iconic and influential superstars from that period was Frank Miller, who built his comic book capital on a staple of the industry—the reboot.

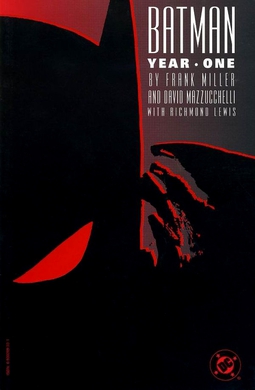

Miller resurrected and reimagined the canon for Daredevil (Marvel) as well as Batman (DC). Some argue that his work on Batman: Year One (with David Mazzucchelli) and The Dark Knight Returns (with Klaus Janson and Lynn Varley) is among the best in the history of superhero comic books.

Miller’s artwork also proved to be a visually impressive source for film—notably his Sin City and 300.

Miller’s superstardom has not been free of stumbles (his script for RoboCop 2) or controversy, as Sean Howe detailed in 2014:

But, as if Miller were one of his own antiheroes, his stark individualist philosophy has also led him down some lonely corridors. He’s written graphic novels that many of his fans recoil from—including one that WIRED called “one of the most appalling, offensive, and vindictive comics of all time.” And he followed that up with ferocious online musings that provoked an outcry, even from some of his most stalwart supporters. In recent years, he’s withdrawn from the public eye.

One of the newest renditions of Miller’s work has itself been mostly hidden from the public eye: the use of Miller’s The Man without Fear and his “Born Again” arc as source material for Netflix’s now-cancelled Daredevil series.

The Many Universes of Superheroes: Netflix’s Miller Lite Adaptation

While rebooting characters and entire universes became a standard convention of comic books at Marvel and DC, the adaptation of superheroes from print to film sputtered throughout the 1970s, 1980s, and into the 1990s.

Marvel has mastered the film adaptation, and much of the public is far more familiar with Marvel’s film universe than with the many universes of the comic books. Concurrent with Marvel’s feature film success and DC’s struggles with films other than Batman, Netflix launched serialized superhero adaptations in conjunction with Marvel: Jessica Jones, Daredevil, Luke Cage, Iron Fist, The Defenders, and The Punisher.

These adaptations, I thought, held much greater potential than feature films; they matched the current generation’s lust for binge-watching, and they also maintained one of the most compelling features of comic books: extended serialization.

The Netflix approach was well suited to Jessica Jones since the adaptations downplayed some of the main conventions of superhero comic books, such as elaborate and identifiable superhero costumes.

To their credit, the Netflix adaptations have been character-driven, often as much about the everyday person as about the superhero alter ego.

Season 1 of Daredevil took that muted approach to superheroes and found the perfect source in Miller’s arc, later published as a graphic novel, The Man without Fear, written by Miller with dynamic artwork from John Romita Jr. (pencils) and Al Williamson (inks).

This first season follows a softened and tweaked Miller narrative and draws significantly from Romita Jr.’s art, notably the black non-costume that Matt Murdock wears for most of the season.

While I have examined The Man without Fear and its relationship with the Netflix series elsewhere [1], below I want to look at Season 3 and its use of the “Born Again” arc as more Miller Lite.

Daredevil Born Again, and Again

The “Born Again” arc (Daredevil vol. 1, issues 227-231, and often including 232-233) is the work of Miller and Mazzucchelli, who also paired on Batman: Year One. This storyline builds on the rebooted Daredevil fashioned by Miller and includes some powerful religious imagery and themes.

Daredevil as a mythology and narrative has survived, I think, like the mythologies of other major superheroes, because at its core it has compelling elements: structural justice versus vigilante justice, tensions surrounding the idea that “justice is blind,” and more. However, the serial rebooting of the character and the adaptations of the comic book medium into feature films and serialized filmed formats suggest, at the least, that these essential elements have not, in some real way, been fulfilled.

This is where the differences between the source material and the adaptation come into play. Netflix’s S3 of Daredevil uses “Born Again” as its primary frame, just as S1 used The Man without Fear. But S3 also pulls, both directly and loosely, from other sources in the comic book universes.

Jesse Schedeen identifies nine changes S3 made to its comic book sources:

- Schedeen focuses on Karen Page’s role in Wilson Fisk/Kingpin discovering that Matt Murdock is Daredevil; in “Born Again,” Karen is manipulated into revealing Murdock’s secret because Miller has reimagined her as a drug addict and a failed actress turned porn performer. I want to add and emphasize here that the Netflix version of Karen is an important shift from Miller’s trite and reductive Karen. Netflix’s adaptation has clearly sought ways to keep Karen flawed (her backstory, revealed in S3, is brutal and dark) while making her a far more complex and fully human character than Miller allowed. Like Matt, Karen feels a great deal of guilt and self-loathing in S3, but this adaptation resists a common flaw in comic book narratives: reducing women to one dimension.

- Another change involves pulling from a different source, “Guardian Devil” from 1998, as Schedeen notes. This change supports my point above, I think, in that the S3 character Benjamin “Dex” Poindexter (an adaptation of the Marvel character Bullseye) kills Father Lantom instead of Karen. Again, I see these changes as allowing a richer and more complex version of Miller’s Karen Page and of the wider contemporary Daredevil canon (in this case crafted by Kevin Smith and Joe Quesada).

- S3 keeps the “Born Again” reveal in which Matt Murdock discovers that Sister Maggie is his mother, as Schedeen details, while developing more tension around it in the adaptation.

- The differing treatment of Wilson Fisk, Dex (Bullseye), and another assassin, Nuke, in “Born Again” and S3 demonstrates how the Netflix series often streamlines source narratives and characters while also, in many ways, blunting superhero elements.

- One of the most distinct differences is S3’s use of Dex, which drops both the name “Bullseye” and the costume. Netflix’s adaptation has chosen to emphasize Dex as mentally unstable, paralleling in many ways, I think, the series-wide motif of childhood trauma (shared by Dex, Fisk, and Murdock) and of conflicts with authority, the parent/child pattern also seen with Karen.

- S3 and the comic book source share Bullseye’s paralysis, and Dex’s surgery at the end of S3 was clearly designed to propel the series into another season.

- Foggy is one of the key characters in the Daredevil myth, and the Netflix version also develops him from the foundational source character into a more complex and even realistic person, a necessary change, I think, given how Foggy parallels Karen in their interactions with Matt.

- Fisk’s love interest, Vanessa, proves to be another interesting adaptation in S3, much like the changes made with Karen. As Schedeen explains, “In the comics, though, Vanessa has a much more complicated relationship with her husband and his criminal empire.” Here, I think, the viewer of S3 is pushed to consider Vanessa as a more fully human and independent character, again much as we view Karen. In comic books, as in literature, women are often reduced to mere symbols or to muses for men, whether heroes or villains.

- Like Dex (Bullseye), Fisk (Kingpin) is essentially drawn from the Marvel comic book universe and from “Born Again,” but the superhero and supervillain elements are greatly muted. The “Born Again” Kingpin projects the sort of large ego we see in S3, but Fisk’s fights and fate differ substantially in the adaptation. Schedeen adds, “Fisk doesn’t suffer quite so resounding a defeat in ‘Born Again.’ He does overplay his hand in his attempts to destroy Matt Murdock, eventually causing the deaths of dozens of Hell’s Kitchen residents when he unleashes the out-of-control Nuke.”

With the Netflix run of Daredevil finished midstream, we can see how Miller’s version has provided a powerful and compelling frame for the adaptation. But we should also recognize the potential and purpose of adapting a story from one medium to another.

The Netflix series as Miller Lite makes an important case for the comic book universe’s urge to reboot and retell. Daredevil as a foundational superhero myth has extremely important characters, motifs, and themes, but too often the array of creators positioned to soar with those elements has tended to flutter, falter, and even fail.

S1 of Daredevil was exciting in its potential, even though I found the cinematography too dark (although the dark tendency of the comic book under some artists, such as Alex Maleev, has been among my favorite qualities). By S3 and its abrupt end, I was increasingly hopeful that this adaptation was working its way in the right direction.

While episode 13 of S3 charged viewers with Matt’s “man without fear” speech at Father Lantom’s funeral, we are left once again with less than we had hoped for.

[1] Thomas, P.L. (2019). From Marvel’s Daredevil to Netflix’s Defenders: Is justice blind? In S. Eckard (Ed.), Comic connections: Building character and theme (pp. 81-98). New York, NY: Rowman and Littlefield; Thomas, P.L. (2012). Daredevil: The man without fear; Elektra lives again; science fiction [Entries]. In Critical Survey of Graphic Novels: Heroes and Superheroes. Pasadena, CA: Salem Press.